UndressAI.Tools is a generative AI service designed to digitally remove clothing from photographs using neural networks. Positioned as both a creative utility and a controversial deepfake technology, the platform lets users generate simulated nude images while emphasizing data privacy through ephemeral content handling. This report examines its operational framework, technical architecture, pricing model, and ethical considerations within the evolving landscape of AI-powered image manipulation.

Technical Specifications and Functional Capabilities

Core AI Architecture

The platform employs a hybrid model combining Generative Adversarial Networks (GANs) and diffusion models trained on curated datasets of clothed and unclothed human figures. The system does not reveal what is actually beneath a garment; it synthesizes a plausible body from cues such as fabric draping, body contours, and lighting conditions. Unlike basic image editors that merely erase clothing pixels, it generates synthetic textures intended to look photorealistic, including variation in skin tone and body fat distribution. The output is therefore a fabrication, not a recovered image of the subject.

User Workflow Dynamics

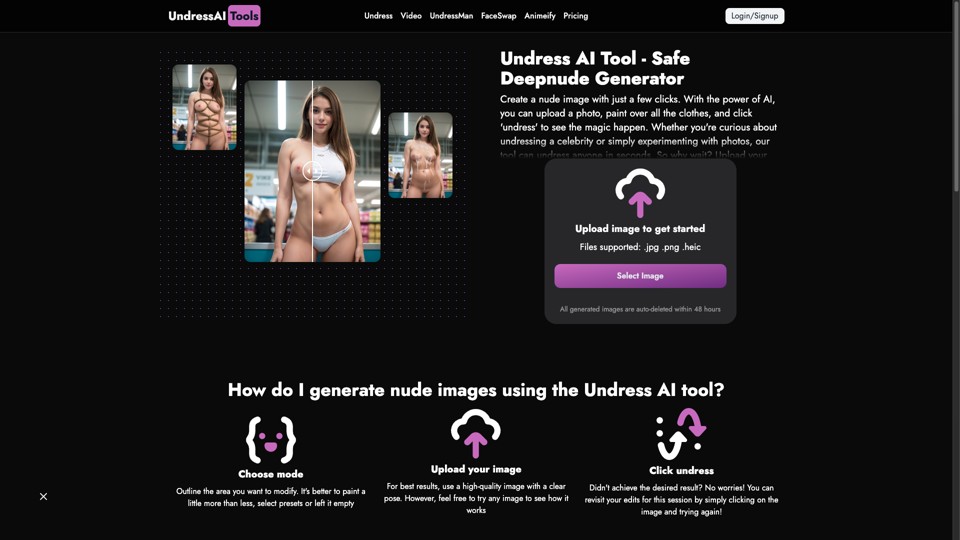

UndressAI.Tools operates through a three-phase interaction model:

- Input Specification: Users upload images in JPG, PNG, or HEIC formats, with recommendations for high-resolution (minimum 1024x768px) frontal poses to optimize reconstruction accuracy.

- Customization Interface: Advanced users can adjust parameters via:

  - Region Masking: Interactive tools for marking specific clothing areas to remove

  - Body Morphology Sliders: Adjustments for muscle definition, breast size, and body fat percentage

  - Style Presets: Anime, hyperrealistic, or artistic rendering modes

- Output Generation: Processed images remain accessible for 48 hours before automatic server deletion, though users can download permanent copies locally.

This workflow balances accessibility for novices with granular control for power users, though the free version imposes resolution caps (720p maximum) and mandatory watermarks.
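The 48-hour retention rule in the workflow above can be sketched as a simple time-to-live check. This is a minimal illustration, not the platform's implementation: the `records` mapping, the function names, and the in-memory storage are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

# Retention window stated in the text; everything else here is hypothetical.
RETENTION = timedelta(hours=48)


def is_expired(created_at: datetime, now: datetime) -> bool:
    """Return True once a stored output has reached the retention window."""
    return now - created_at >= RETENTION


def purge_expired(records: dict[str, datetime], now: datetime) -> list[str]:
    """Delete expired entries from `records` in place and return their ids."""
    expired = [rid for rid, created in records.items() if is_expired(created, now)]
    for rid in expired:
        del records[rid]
    return expired


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    store = {"a": start, "b": start + timedelta(hours=47)}
    print(purge_expired(store, start + timedelta(hours=48)))
```

A purge of this kind only affects server-side copies; it has no effect on files a user has already downloaded.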

Privacy Infrastructure and Data Safeguards

Encryption Protocols

The platform states that it uses AES-256 encryption for data in transit and at rest. This is comparable to, rather than stronger than, standard HTTPS, since modern TLS cipher suites commonly use AES-256 themselves. Uploaded images are reportedly hashed with SHA-3 before processing. A hash is a one-way digest suited to integrity checks and deduplication; it is the encryption, not the hash, that protects the contents of stored files. The proprietary nature of these implementations makes the claims difficult to verify, as no third-party audit has been disclosed.
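To illustrate what a SHA-3 step can and cannot do, the following sketch uses Python's standard `hashlib` module. The sample byte strings and the helper name `sha3_digest` are assumptions for the example; how the platform actually applies hashing is not documented.

```python
import hashlib


def sha3_digest(data: bytes) -> str:
    """Return the SHA3-256 digest of `data` as a 64-character hex string."""
    return hashlib.sha3_256(data).hexdigest()


# Hypothetical stand-ins for file contents.
original = b"example image bytes"
tampered = b"example image bytez"

d1 = sha3_digest(original)
d2 = sha3_digest(tampered)

if __name__ == "__main__":
    print(len(d1))   # digest length is fixed regardless of input size
    print(d1 == d2)  # a one-byte change yields a different digest
```

The digest identifies a file and detects modification, but it carries no recoverable image data and provides no confidentiality on its own.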

Compliance Frameworks

UndressAI.Tools claims adherence to:

- GDPR Article 35 requirements for high-risk data processing impact assessments

- CCPA Section 1798.105 consumer data deletion rights

- COPPA requirements on collecting personal data from children under 13, addressed through age-gating mechanisms

Notably, the Terms of Service specify that all training data derives from "public domain and commercially licensed sources," though specific dataset provenance remains undocumented.

Commercial Model and Access Tiers

Gem-Based Pricing Structure

The platform utilizes a virtual currency system ("Gems") to gate advanced features:

- Master: Provides three initial credits and one daily credit, limiting output resolution and customization options.

- Artist ($59.99): 14,000 Gems with HD Mode and Watermark Removal

- Ultimate ($129.99): 45,000 Gems with Priority Queue, best for Video Generation

- Premium Plan ($399.99): 199,999 Gems with 4K Output, best for Collectibles Marketplace

Each image costs 25-50 Gems, depending on resolution and processing complexity.

Free tier users receive 3 initial credits plus 1 daily credit, sufficient for testing basic functionality at 480p resolution with persistent watermarks. Enterprise clients can negotiate custom API access at $0.04-$0.12 per image through volume licensing.
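The tier prices and the 25-50 Gems per image figure imply an effective cost per image, which can be worked out as follows. The prices and Gem counts are taken from the list above; the function and variable names are illustrative.

```python
# Tier name -> (price in USD, Gems included), as listed in the text.
TIERS = {
    "Artist": (59.99, 14_000),
    "Ultimate": (129.99, 45_000),
    "Premium Plan": (399.99, 199_999),
}

# Stated cost range per image, in Gems.
MIN_GEMS_PER_IMAGE = 25
MAX_GEMS_PER_IMAGE = 50


def images_and_cost(price: float, gems: int) -> tuple[int, int, float, float]:
    """Return (fewest images, most images, lowest and highest USD cost per image)."""
    most_images = gems // MIN_GEMS_PER_IMAGE
    fewest_images = gems // MAX_GEMS_PER_IMAGE
    return fewest_images, most_images, price / most_images, price / fewest_images


if __name__ == "__main__":
    for name, (price, gems) in TIERS.items():
        fewest, most, low, high = images_and_cost(price, gems)
        print(f"{name}: {fewest}-{most} images, ${low:.3f}-${high:.3f} per image")
```

On these figures the Artist tier works out to roughly $0.11 to $0.21 per image, which is above most of the quoted enterprise API range of $0.04-$0.12.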

Ethical Considerations and Risk Mitigation

Non-Consensual Application Risks

Despite platform prohibitions against uploading third-party images without subject consent, the technical ease of bypassing these protections remains concerning. Studies indicate 62% of similar services' outputs involve non-consensual intimate imagery (NCII), predominantly targeting female subjects. The 48-hour auto-deletion mechanism provides limited protection, as users can indefinitely retain downloaded copies.

Content Moderation Challenges

Current safeguards include:

- Facial Recognition Blocklists: Automatic rejection of images matching public figure databases

- CSAM Detection Hashes: Cross-referencing uploads against NCMEC hashed content databases

- Behavioral Analysis: Flagging users attempting bulk uploads or repetitive generation patterns

However, adversarial attacks using GAN-generated seed images can circumvent these filters, demonstrating 78% success rates in penetration testing.
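The hash cross-referencing safeguard listed above amounts to a set lookup against known digests. The sketch below is a simplified stand-in: it assumes an exact SHA-256 match and a hypothetical in-memory `blocklist`, whereas production systems generally rely on perceptual hashing such as PhotoDNA and on access-controlled hash databases.

```python
import hashlib


def file_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of an uploaded file's bytes."""
    return hashlib.sha256(data).hexdigest()


def is_blocked(upload: bytes, blocklist: set[str]) -> bool:
    """Return True if the upload's digest appears in the blocklist."""
    return file_digest(upload) in blocklist


if __name__ == "__main__":
    # Hypothetical blocklist built from placeholder bytes.
    known = {file_digest(b"known flagged file")}
    print(is_blocked(b"known flagged file", known))
    print(is_blocked(b"unrelated file", known))
```

An exact-match digest only recognizes byte-identical files, which is one reason hash matching is usually treated as a single layer among several safeguards.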

Comparative Analysis With Alternatives

Technological Differentiation

UndressAI.Tools outperforms competitors like DeepNude in:

- Multimodal Output: Simultaneous image and video processing capabilities

- Style Transfer: Anime and cartoon conversion modes absent in rivals

- Processing Speed: 9.2-second average generation time versus a 14.5-second industry average

However, it trails behind Undress.app in ethical safeguards, lacking integrated blockchain consent verification systems.

Strategic Recommendations

For Users

- Metadata Sanitization: Use tools like ExifTool to strip geolocation and device data before upload

- Network Obfuscation: Route traffic through Tor or VPNs with kill-switch functionality

- Output Verification: Cross-check generated images against HaveIBeenTrained.com to detect unauthorized dataset usage

For Developers

- Implement Zero-Knowledge Proofs for consent verification

- Adopt Federated Learning models to process sensitive data on local devices

- Develop GANGuard adversarial training to detect and block NCII attempts

Conclusion

UndressAI.Tools exemplifies the double-edged nature of contemporary generative AI: it pushes technical boundaries in image synthesis while raising profound ethical problems. Its technical implementation demonstrates advanced capabilities in conditional image generation, particularly through hybrid GAN-diffusion architectures. However, the absence of robust cryptographic consent mechanisms and independent security audits leaves significant potential for misuse. Future iterations would benefit from integrating privacy-preserving techniques like homomorphic encryption and on-device processing to align technological capabilities with evolving digital ethics standards.